In a bid to improve quality control and reduce production errors, manufacturers are increasingly adopting automated anomaly detection systems. These systems automatically identify defects in manufactured products and are widely employed in industries such as automotive manufacturing, electronics, and pharmaceuticals.

Traditional anomaly detection systems rely on supervised learning, where models are trained on labeled datasets containing both normal and defective samples. However, in most industrial settings, defect data is usually scarce or highly variable, making these approaches impractical. As a result, unsupervised learning models that rely exclusively on defect-free data have emerged as a promising alternative.

Recently, embedding-based methods have achieved state-of-the-art results for unsupervised anomaly detection. Unfortunately, these methods rely on memory banks, resulting in substantial increases in memory consumption and inference times. This is problematic for industrial settings, which are often constrained in memory resources and processing speed. Additionally, these methods perform poorly in low-light or variable lighting conditions, which are common in manufacturing environments.

To address these challenges, a research team led by Associate Professor Phan Xuan Tan from the Innovative Global Program and College of Engineering at Shibaura Institute of Technology, Japan, and Dr. Hoang Dinh Cuong from FPT University, Vietnam, has developed an innovative unsupervised model for industrial anomaly detection (IAD) and localization using paired well-lit and low-light images.

“Our approach is the first to leverage paired well-lit and low-light images for detecting anomalies, while remaining lightweight and memory-efficient,” explains Dr. Tan. The team also included researchers from FPT University in Vietnam. Their study is published in the Journal of Computational Design and Engineering.

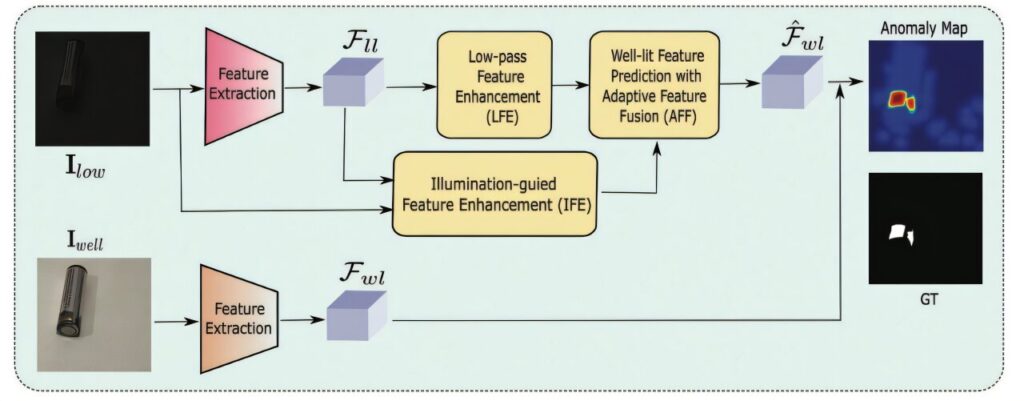

The proposed model first extracts feature maps for both well-lit and low-light images, capturing their structural and textural details. It then refines these features using two innovative modules. The Low-pass Feature Enhancement (LFE) module emphasizes low-frequency components of feature maps. In low-light environments, high-frequency features are often corrupted by noise, obscuring subtle surface details crucial for anomaly detection. By focusing on low-frequency features, the LFE module effectively suppresses noise in low-light images.
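The idea of keeping low-frequency content while discarding noisy high-frequency content can be illustrated with a small NumPy sketch. The article does not say how the LFE module is built, so the ideal frequency-domain mask below, the function name `low_pass_features`, and the `cutoff` parameter are all assumptions made for illustration, not the authors' implementation.

```python
import numpy as np

def low_pass_features(features: np.ndarray, cutoff: float = 0.25) -> np.ndarray:
    """Keep only spatial frequencies at or below `cutoff` (cycles per pixel).

    features: array of shape (C, H, W). `cutoff` is a hypothetical parameter;
    the published LFE module may use a different (learned) filter.
    """
    _, height, width = features.shape
    # Frequency coordinates along each axis, in cycles per pixel.
    fy = np.fft.fftfreq(height)[:, None]
    fx = np.fft.fftfreq(width)[None, :]
    # Ideal circular low-pass mask: 1 inside the cutoff radius, 0 outside.
    mask = (np.sqrt(fx**2 + fy**2) <= cutoff).astype(float)
    # Filter every channel independently in the frequency domain.
    spectrum = np.fft.fft2(features, axes=(-2, -1))
    return np.fft.ifft2(spectrum * mask, axes=(-2, -1)).real
```

In this sketch a pixel-level checkerboard pattern, which stands in for high-frequency noise, is removed entirely, while a constant (smooth) signal passes through unchanged.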

Low-light images also suffer from nonuniform lighting. The Illumination-aware Feature Enhancement module addresses this issue by generating an estimated illumination map to dynamically adjust features based on lighting variations. This ensures correct feature representation even in uneven lighting.
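A rough sketch of illumination-guided adjustment follows. It assumes a simple, non-learned illumination estimate (the per-pixel maximum over color channels, averaged over blocks) and a gain that amplifies dimly lit regions. The function names, the `eps` parameter, and the gain formula are hypothetical choices for illustration; the article does not describe the module's internals.

```python
import numpy as np

def estimate_illumination(image: np.ndarray, out_hw: tuple[int, int]) -> np.ndarray:
    """Coarse illumination map: per-pixel max over RGB, block-averaged to out_hw.

    image: (H, W, 3) with values in [0, 1]; H and W must be multiples of out_hw.
    """
    brightness = image.max(axis=-1)
    out_h, out_w = out_hw
    block_h = brightness.shape[0] // out_h
    block_w = brightness.shape[1] // out_w
    # Split the image into blocks, then average inside each block.
    blocks = brightness.reshape(out_h, block_h, out_w, block_w)
    return blocks.mean(axis=(1, 3))

def illumination_adjust(features: np.ndarray, illumination: np.ndarray,
                        eps: float = 1e-3) -> np.ndarray:
    """Scale (C, H, W) features so dim locations are amplified relative to bright ones."""
    # Gain is above 1 where the map is darker than average, below 1 where brighter.
    gain = illumination.mean() / (illumination + eps)
    return features * gain[None, :, :]
```

Under uniform lighting the gain is close to 1 everywhere, so the features are left nearly untouched; only uneven lighting triggers a correction.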

The outputs from these two modules are then integrated using an Adaptive Feature Fusion module, which captures their complementary features and enhances the most relevant details. It does so by employing channel-attention and spatial-attention mechanisms. The former ensures that only the relevant features are emphasized while the redundant ones are suppressed, and the latter preserves fine-grained details.
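To make the two attention mechanisms concrete, here is a parameter-free sketch. A trained fusion module would normally compute its attention weights with learned layers; the pooling-plus-sigmoid weights and the function names below are simplifying assumptions, not the published design.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(x: np.ndarray) -> np.ndarray:
    """Per-channel weights of shape (C, 1, 1) from global average pooling."""
    return sigmoid(x.mean(axis=(1, 2), keepdims=True))

def spatial_attention(x: np.ndarray) -> np.ndarray:
    """Per-location weights of shape (1, H, W) from the mean over channels."""
    return sigmoid(x.mean(axis=0, keepdims=True))

def fuse(lowpass_feats: np.ndarray, illum_feats: np.ndarray) -> np.ndarray:
    """Fuse two (C, H, W) feature maps with channel, then spatial, attention."""
    combined = lowpass_feats + illum_feats
    combined = combined * channel_attention(combined)  # reweight whole channels
    return combined * spatial_attention(combined)      # reweight individual locations
```

Channel attention assigns one weight per feature channel, while spatial attention assigns one weight per location, which is why the two are applied in sequence rather than merged into a single step.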

Anomalies are then detected by comparing the well-lit features reconstructed from the low-light inputs to the original extracted features. The comparison produces an anomaly map for localizing defects and a global anomaly score for detection. Because it minimizes reliance on memory-intensive feature embeddings, the approach is computationally efficient and suitable for real-time industrial applications.
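The comparison step can be sketched as follows. The article does not name the distance measure or how the global score is derived, so the per-location cosine distance and the maximum-based score used here are assumptions, as are the function names.

```python
import numpy as np

def anomaly_map(reconstructed: np.ndarray, reference: np.ndarray,
                eps: float = 1e-8) -> np.ndarray:
    """Per-location cosine distance between two (C, H, W) feature maps.

    Returns an (H, W) map: near 0 where the features agree, up to 2 where
    they point in opposite directions.
    """
    dot = (reconstructed * reference).sum(axis=0)
    norms = np.linalg.norm(reconstructed, axis=0) * np.linalg.norm(reference, axis=0)
    return 1.0 - dot / (norms + eps)

def anomaly_score(amap: np.ndarray) -> float:
    """Image-level score: the largest per-location distance (an assumed choice)."""
    return float(amap.max())
```

High values in the map mark locations where the reconstruction disagrees with the extracted features, which is where a defect is most likely; thresholding the single score then gives an image-level decision.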

To evaluate their model, the researchers developed a new dataset called LL-IAD, where LL stands for low-light. It comprises 6,600 paired well-lit and low-light images across 10 object categories. They also tested their model on external industrial datasets, including Insulator and Clutch. The model significantly outperformed existing state-of-the-art methods across all datasets. Notably, even in the absence of real well-lit images, it maintained high detection accuracy, demonstrating its practical applicability in challenging industrial environments.

“Our model offers a computationally efficient, memory-friendly, and robust solution for IAD in common low-light manufacturing conditions,” remarks Dr. Tan. “Additionally, LL-IAD is the first dataset specifically designed for IAD under low-light conditions, which we hope will serve as a valuable resource for future research.”

By introducing both an innovative anomaly detection framework and a comprehensive new dataset, this research addresses a key challenge in IAD, with potential benefits across diverse industries.

More information: Dinh-Cuong Hoang et al., Unsupervised industrial anomaly detection using paired well-lit and low-light images, Journal of Computational Design and Engineering (2025). DOI: 10.1093/jcde/qwaf043

Citation: Computationally efficient anomaly detection achieved through novel dual-lighting model (2025, July 31), retrieved 1 August 2025 from https://techxplore.com/news/2025-07-efficient-anomaly-dual.html
