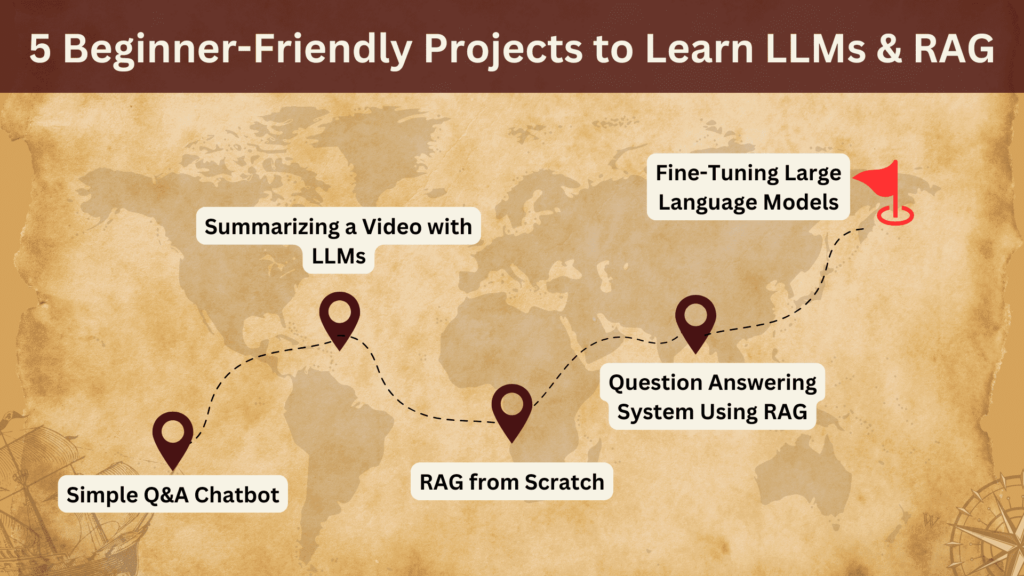

5 Beginner-Friendly Projects to Learn LLMs & RAG

Image by Author | Canva

I believe in the ‘learning by doing’ approach: you learn more by building things yourself. As a beginner, however, you need to be careful not to overwhelm yourself by jumping into a complex project too soon. To help you get comfortable working with large language models (LLMs) and retrieval-augmented generation (RAG), I’ll share five projects that are well suited to beginners. They are arranged in order of complexity, starting with a simple API call and gradually progressing to fine-tuning a large language model on your own custom dataset. So, let’s get started!

1. Building a Simple Q&A Chatbot Using the GPT-4 API

Tutorial by: Tom Chant

This project guides you through creating a chatbot called “KnowItAll” using the GPT-4 API. The bot can answer questions, generate content, translate text, and even write code. The tutorial walks you through everything you need to know, from setting up a web interface with HTML, CSS, and vanilla JavaScript to connecting to the OpenAI API to build a conversational AI.

What You’ll Learn

- How to set up an interactive chatbot interface.

- Handling conversation context by storing the message history in an array, so the bot can refer back to earlier turns.

- Making API calls to OpenAI and using GPT-4 models.
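The tutorial keeps the conversation in a JavaScript array; the same idea is sketched below in Python. It assumes the role/content message format of OpenAI's chat API and replaces the network call with a hypothetical `echo_model` stub so it runs offline. With the official `openai` Python package, `call_model` could instead wrap `client.chat.completions.create(model=..., messages=messages)` and return `response.choices[0].message.content`. The `max_messages` trimming is an illustrative choice, not something taken from the tutorial.

```python
# Sketch: keep chat history in a list so each API call carries earlier turns.
# Assumptions: OpenAI-style {"role", "content"} messages; echo_model is a
# hypothetical stub that stands in for the real network call.
from typing import Callable

Message = dict[str, str]


def make_conversation(system_prompt: str) -> list[Message]:
    """Start the history with a system message that sets the bot's persona."""
    return [{"role": "system", "content": system_prompt}]


def chat_turn(history: list[Message], user_text: str,
              call_model: Callable[[list[Message]], str],
              max_messages: int = 20) -> str:
    """Record the user's message, query the model, and record its reply."""
    history.append({"role": "user", "content": user_text})
    # Resend the system message plus only the most recent messages so long
    # chats stay within the model's context window.
    recent = history[1:][-max_messages:]
    reply = call_model([history[0]] + recent)
    history.append({"role": "assistant", "content": reply})
    return reply


def echo_model(messages: list[Message]) -> str:
    """Hypothetical stand-in for a real chat-completion request."""
    return f"You said: {messages[-1]['content']}"


history = make_conversation("You are KnowItAll, a helpful assistant.")
chat_turn(history, "What is RAG?", echo_model)
chat_turn(history, "Explain it in one sentence.", echo_model)
print(len(history))  # 5: system + 2 user + 2 assistant messages
```

Because every request resends the stored history, the model can "remember" earlier turns; dropping the oldest messages is one simple way to keep long chats inside the context window.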

2. Summarizing a Video with LLMs

Tutorial by: Agnieszka Mikołajczyk-Bareła

In this quick tutorial, you’ll learn how to summarize YouTube videos using LLMs and automatic speech recognition (ASR) transcripts. The tutorial shows you how to grab a video’s subtitles, restore the punctuation that auto-generated transcripts often lack using the Rpunct library, and send the cleaned-up text to OpenAI models to summarize it or answer questions about it.

What You’ll Learn

- Using the YouTube Transcript API to extract video subtitles.

- How to improve readability by fixing punctuation in ASR transcriptions.

- Querying OpenAI models to summarize or answer questions based on video content.
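Long videos can produce transcripts that exceed a model’s context window. A common workaround, sketched here in Python and not necessarily the tutorial’s exact approach, is map-reduce summarization: split the transcript into chunks, summarize each one, then summarize the partial summaries. The segment format (dicts with a `text` key plus timing fields), the sample data, the prompt wording, and the word-based chunk size are all assumptions, and the actual LLM calls are left out.

```python
# Sketch: map-reduce summarization prompts for a long video transcript.
# Assumptions: segments are dicts with a "text" key (timing keys ignored),
# punctuation is already restored, and the LLM calls happen elsewhere.


def join_segments(segments: list[dict]) -> str:
    """Concatenate non-empty subtitle snippets into one transcript string."""
    return " ".join(s["text"].strip() for s in segments if s["text"].strip())


def split_words(text: str, max_words: int) -> list[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def map_prompts(transcript: str, max_words: int = 1500) -> list[str]:
    """Map step: one 'summarize this part' prompt per chunk."""
    return [f"Summarize this part of a video transcript in 3 bullet points:\n\n{chunk}"
            for chunk in split_words(transcript, max_words)]


def reduce_prompt(partial_summaries: list[str]) -> str:
    """Reduce step: merge the per-chunk summaries into one summary."""
    joined = "\n\n".join(partial_summaries)
    return f"Combine these partial summaries into one concise summary:\n\n{joined}"


segments = [  # hypothetical sample data
    {"text": "Welcome to the channel.", "start": 0.0, "duration": 2.1},
    {"text": "Today we look at retrieval-augmented generation.", "start": 2.1, "duration": 3.4},
    {"text": "  ", "start": 5.5, "duration": 0.5},
]
transcript = join_segments(segments)
prompts = map_prompts(transcript, max_words=5)
print(len(prompts))  # 2: the 10-word transcript split into 5-word chunks
```

Each map prompt would be sent to the model separately, and the resulting summaries passed to `reduce_prompt` for a final call.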

3. Building Retrieval-Augmented Generation (RAG) from Scratch

Tutorial by: Mahnoor Nauyan

LLMs only know what was in their training data, so on their own they struggle with private documents or information published after their training cutoff. This tutorial shows you how to build a RAG solution from scratch, without relying on libraries like LangChain or LlamaIndex. You’ll walk through the whole process, from document processing and chunking to generating embeddings and retrieving relevant chunks by similarity.
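Chunking can be as simple as a sliding window. This minimal Python sketch splits on whitespace and counts words as a rough stand-in for tokens; the overlap between neighbouring windows helps keep context that straddles a chunk boundary. The function name and default sizes are hypothetical, and the tutorial may chunk differently (for example by sentences or tokens).

```python
# Sketch: fixed-size chunking with overlap (sliding window over words).
# Words are a rough stand-in for tokens; sizes here are illustrative.


def chunk_text(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into windows of chunk_size words, each sharing
    `overlap` words with the previous window."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reaches the end of the text
    return chunks


doc = " ".join(f"w{i}" for i in range(10))
for chunk in chunk_text(doc, chunk_size=4, overlap=1):
    print(chunk)
# w0 w1 w2 w3
# w3 w4 w5 w6
# w6 w7 w8 w9
```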

What You’ll Learn

- What RAG is and how it’s useful for working with private data.

- Chunking documents into manageable sizes for indexing.

- Using cosine similarity to retrieve the most relevant chunks.

- How to connect the retrieved data with LLMs for more accurate, context-aware responses.
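Retrieval then ranks chunks by the cosine similarity between the query embedding q and each chunk embedding d, dot(q, d) / (|q| * |d|), and the top matches are pasted into the prompt. In this self-contained Python sketch, `embed` is a hypothetical bag-of-words stand-in for a real embedding model, and the prompt template is illustrative; neither comes from the tutorial.

```python
# Sketch: cosine-similarity retrieval plus prompt assembly.
# embed() is a toy stand-in for a real embedding model (an assumption).
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts as a sparse vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """dot(a, b) / (|a| * |b|); returns 0.0 if either vector is empty."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Ground the LLM's answer in the retrieved chunks."""
    joined = "\n---\n".join(context)
    return (f"Answer using only the context below.\n\n"
            f"Context:\n{joined}\n\nQuestion: {query}")


chunks = [
    "Our refund policy allows returns within 30 days.",
    "The office is closed on public holidays.",
    "Refunds are issued to the original payment method.",
]
question = "How do refunds work?"
print(build_prompt(question, retrieve(question, chunks, k=1)))
```

A real pipeline would precompute and store chunk embeddings once rather than re-embedding every chunk on each query.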

4. Building Your Own Question Answering System Using RAG

Tutorial by: Abhirami VS

This project shows you how to improve the accuracy and relevance of LLM-generated answers using Retrieval-Augmented Generation (RAG). By grounding responses in external data sources, RAG helps reduce issues like hallucination and bias, which makes it well suited to domain-specific tasks. The tutorial uses open-source tools: Mistral-7B for generating responses and the Chroma vector store for retrieving relevant data.

What You’ll Learn

- Setting up a RAG pipeline with external knowledge sources.

- Using vector stores like Chroma for data retrieval.

- How to use open-source LLMs like Mistral-7B to generate accurate answers.
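To make the vector-store idea concrete without installing anything, here is a toy in-memory store in Python. It loosely mirrors the argument names Chroma uses when adding and querying (`ids`, `documents`, `embeddings`, `metadatas`, `n_results`, `where`), but it is a hypothetical class, not Chroma’s API. It normalizes vectors on insert so a dot product equals cosine similarity, and it searches by brute force where a real store would typically use an approximate nearest-neighbour index. The three-dimensional embeddings are made up for illustration.

```python
# Sketch: a toy in-memory vector store (hypothetical, not Chroma's API).
import math
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ToyVectorStore:
    ids: list[str] = field(default_factory=list)
    documents: list[str] = field(default_factory=list)
    metadatas: list[dict] = field(default_factory=list)
    vectors: list[list[float]] = field(default_factory=list)

    @staticmethod
    def _normalize(v: list[float]) -> list[float]:
        """Scale to unit length so a dot product equals cosine similarity."""
        norm = math.sqrt(sum(x * x for x in v))
        return [x / norm for x in v] if norm else list(v)

    def add(self, ids: list[str], documents: list[str],
            embeddings: list[list[float]],
            metadatas: Optional[list[dict]] = None) -> None:
        metadatas = metadatas or [{} for _ in ids]
        for i, doc, emb, meta in zip(ids, documents, embeddings, metadatas):
            self.ids.append(i)
            self.documents.append(doc)
            self.metadatas.append(meta)
            self.vectors.append(self._normalize(emb))

    def query(self, embedding: list[float], n_results: int = 2,
              where: Optional[dict] = None) -> list[tuple[str, str, float]]:
        """Return (id, document, score) for the closest stored vectors,
        optionally keeping only entries whose metadata matches `where`."""
        q = self._normalize(embedding)
        hits = []
        for i, doc, meta, vec in zip(self.ids, self.documents,
                                     self.metadatas, self.vectors):
            if where and any(meta.get(k) != v for k, v in where.items()):
                continue  # metadata filter: exact match on every key
            hits.append((i, doc, sum(a * b for a, b in zip(q, vec))))
        hits.sort(key=lambda h: h[2], reverse=True)
        return hits[:n_results]


store = ToyVectorStore()
store.add(
    ids=["doc1", "doc2", "doc3"],
    documents=["Mistral-7B is an open-weight LLM.",
               "Chroma stores embeddings for retrieval.",
               "Paris is the capital of France."],
    embeddings=[[0.9, 0.1, 0.0], [0.1, 0.9, 0.0], [0.0, 0.0, 1.0]],  # made-up vectors
    metadatas=[{"topic": "llm"}, {"topic": "db"}, {"topic": "geo"}],
)
print(store.query([1.0, 0.0, 0.0], n_results=1))
print(store.query([1.0, 0.0, 0.0], where={"topic": "db"}))
```

The retrieved documents would then be inserted into the prompt sent to the LLM (Mistral-7B in this tutorial).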

5. Fine-Tuning Large Language Models (LLMs) with QLoRA

Tutorial by: Sumit Das

This tutorial shows you how to fine-tune pre-trained LLMs for specific tasks using QLoRA (Quantized Low-Rank Adaptation). QLoRA is memory-efficient: it keeps the base model frozen in 4-bit precision and trains only small low-rank adapter matrices, so you can customize models on modest hardware. The project uses the Phi-2 model and walks you through dataset preparation, training a LoRA adapter, and evaluating the results.
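The “low-rank adaptation” part can be shown with plain arithmetic. LoRA freezes a weight matrix W (d_out × d_in) and learns two small matrices, A (r × d_in) and B (d_out × r), so the layer computes y = Wx + (α/r)·BAx and adds only r·(d_in + d_out) trainable parameters. The pure-Python sketch below uses hypothetical class and function names and initializes B to zeros so the adapter starts as a no-op. QLoRA’s other ingredient, storing the frozen W in 4-bit NormalFloat (NF4) precision, is omitted; in practice Hugging Face’s `peft` and `bitsandbytes` libraries handle both parts.

```python
# Sketch: a LoRA-adapted linear layer in pure Python (no quantization).
import random


def matvec(m: list[list[float]], v: list[float]) -> list[float]:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class LoRALinear:
    """Frozen W (d_out x d_in) plus trainable B (d_out x r) @ A (r x d_in).
    Forward pass: y = W x + (alpha / r) * B (A x)."""

    def __init__(self, W: list[list[float]], r: int = 2,
                 alpha: float = 4, seed: int = 0):
        rng = random.Random(seed)
        self.W = W  # frozen base weights (QLoRA would keep these in 4-bit)
        d_out, d_in = len(W), len(W[0])
        # A starts small and random, B starts at zero, so B @ A = 0 initially.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]
        self.B = [[0.0] * r for _ in range(d_out)]
        self.scale = alpha / r

    def forward(self, x: list[float]) -> list[float]:
        base = matvec(self.W, x)
        update = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * u for b, u in zip(base, update)]

    def trainable_params(self) -> int:
        """Only A and B would be updated during fine-tuning."""
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


def lora_param_count(d_out: int, d_in: int, r: int) -> int:
    """Trainable parameters LoRA adds to one d_out x d_in layer."""
    return r * (d_in + d_out)


layer = LoRALinear([[1, 2, 3], [4, 5, 6]], r=2, alpha=4)
print(layer.forward([1, 1, 1]))  # [6.0, 15.0]: identical to W x at initialization
print(lora_param_count(2560, 2560, 16))  # 81920
```

For a hypothetical 2560 × 2560 projection, full fine-tuning would update 6,553,600 weights, while a rank-16 adapter trains 81,920, about 1.25% of that layer.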

What You’ll Learn

- Setting up and fine-tuning LLMs using QLoRA for custom datasets.

- Using Hugging Face libraries for tokenization, training, and evaluation.

- How to evaluate fine-tuned models using metrics like ROUGE.
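ROUGE scores a generated text by its n-gram overlap with a reference. The Python sketch below computes ROUGE-N precision, recall, and F1 with clipped counts. Its lowercase whitespace tokenization is a simplifying assumption, so scores may differ slightly from established implementations such as Google’s `rouge_score` package or the ROUGE metric in Hugging Face’s `evaluate` library, which you would more likely use in practice.

```python
# Sketch: ROUGE-N from clipped n-gram overlap (simplified tokenization).
from collections import Counter


def rouge_n(candidate: str, reference: str, n: int = 1) -> dict[str, float]:
    """Return ROUGE-N precision, recall, and F1 for one candidate/reference pair."""
    def ngrams(text: str) -> Counter:
        toks = text.lower().split()
        return Counter(tuple(toks[i:i + n]) for i in range(len(toks) - n + 1))

    cand, ref = ngrams(candidate), ngrams(reference)
    overlap = sum((cand & ref).values())  # & keeps the min count of each n-gram
    precision = overlap / max(sum(cand.values()), 1)
    recall = overlap / max(sum(ref.values()), 1)
    f1 = 2 * precision * recall / (precision + recall) if overlap else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


reference = "the cat sat on the mat"
candidate = "the cat is on the mat"
print(round(rouge_n(candidate, reference)["f1"], 3))       # 0.833 (ROUGE-1)
print(round(rouge_n(candidate, reference, n=2)["f1"], 3))  # 0.6 (ROUGE-2)
```

Recall asks how much of the reference the output covers, precision asks how much of the output is supported by the reference, and F1 balances the two.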

By working through these projects in order, you’ll gradually build your confidence and get the hang of working with LLMs and RAG. From chatbots and video summarization to retrieval systems and fine-tuning, each project pushes your skills forward and prepares you for more advanced AI challenges down the road.