In context: Teaching robots new skills has traditionally been slow and painstaking, requiring hours of step-by-step demonstrations for even the simplest tasks. If a robot encountered something unexpected, like dropping a tool or facing an unanticipated obstacle, its progress would often grind to a halt. This inflexibility has long limited the practical use of robots in environments where unpredictability is the norm.

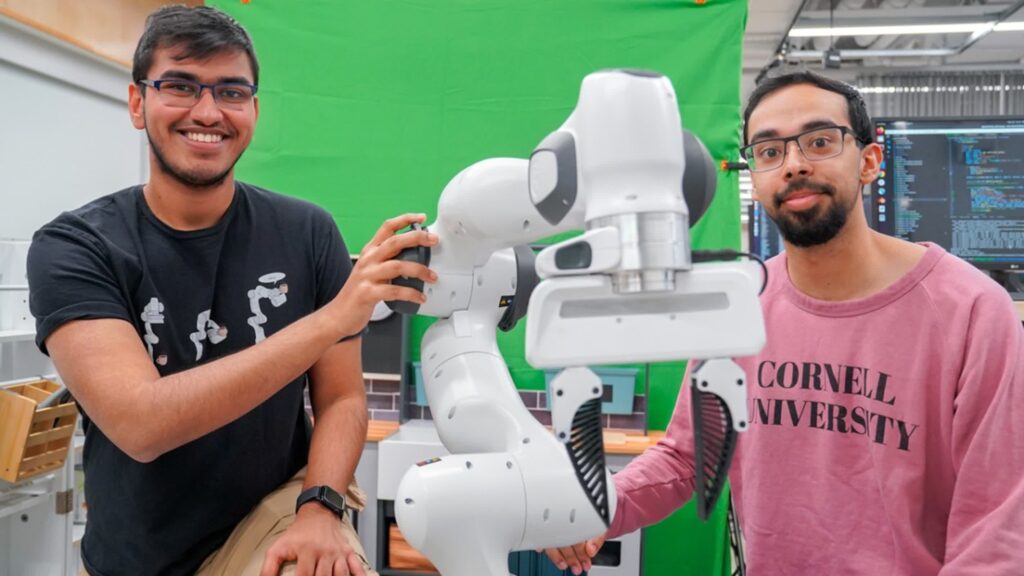

Researchers at Cornell University are now charting a new course with RHyME, an artificial intelligence framework that dramatically streamlines robot learning. An acronym for Retrieval for Hybrid Imitation under Mismatched Execution, RHyME enables robots to pick up new skills by watching a single demonstration video. This is a sharp departure from the exhaustive data collection and flawless repetition previously required for skill acquisition.

The key advance with RHyME is its ability to overcome the challenge of translating human demonstrations into robotic actions. While humans naturally adapt their movements to changing circumstances, robots have historically needed rigid, perfectly matched instructions to succeed. Even slight differences between how a person and a robot perform a task could derail the learning process.

RHyME tackles this problem by allowing robots to tap into a memory bank of previously observed actions. When shown a new demonstration, such as placing a mug in a sink, the robot searches its stored experiences for similar actions, like picking up a cup or putting down an object. The robot can figure out how to perform the new task by piecing together these familiar fragments, even if it has never seen that exact scenario.
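The retrieve-and-compose idea can be illustrated with a toy nearest-neighbor lookup. This is a minimal sketch, not RHyME's actual method or code. It assumes each stored robot clip and each segment of a new demonstration has already been reduced to a small feature vector (the hypothetical `MEMORY_BANK` entries and numbers below are invented for illustration), and it uses plain cosine similarity to match each demo segment to its most similar stored clip.

```python
import math

# Hypothetical memory bank: each previously observed robot clip is summarized
# by a small, made-up feature vector. Real systems would use learned video embeddings.
MEMORY_BANK = {
    "pick_up_cup": [0.9, 0.1, 0.0],
    "put_down_object": [0.1, 0.9, 0.1],
    "open_drawer": [0.0, 0.2, 0.9],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def retrieve(segment, bank):
    """Return the name of the stored clip most similar to one demo segment."""
    return max(bank, key=lambda name: cosine_similarity(segment, bank[name]))

def compose_plan(demo_segments, bank):
    """Match every segment of a new demonstration to a familiar stored clip."""
    return [retrieve(seg, bank) for seg in demo_segments]

# A new "place the mug in the sink" demo, split into two hypothetical segments.
mug_demo = [[0.8, 0.2, 0.1], [0.2, 0.8, 0.0]]
print(compose_plan(mug_demo, MEMORY_BANK))  # ['pick_up_cup', 'put_down_object']
```

The point of the sketch is that the new task never has to appear in the memory bank as a whole: each piece only needs a sufficiently similar fragment, and the sequence of retrieved fragments forms the plan.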

This approach makes robot learning more flexible and far more efficient. RHyME requires only about 30 minutes of robot-specific training data, compared with the thousands of hours demanded by earlier methods. In laboratory tests, robots trained with RHyME achieved more than a 50 percent increase in task success over those trained with previous techniques.

The research team, led by doctoral student Kushal Kedia and assistant professor Sanjiban Choudhury, will present their findings at the upcoming IEEE International Conference on Robotics and Automation in Atlanta. Their collaborators include Prithwish Dan, Angela Chao, and Maximus Pace. The project has received support from Google, OpenAI, the US Office of Naval Research, and the National Science Foundation.