Prompt Engineering Patterns for Successful RAG Implementations


You know it as well as I do: people are relying more and more on generative AI and large language models (LLMs) for quick and easy information acquisition. Given a simple instruction prompt, these models often return impressive generated text. However, the outputs are sometimes inaccurate or irrelevant to the prompt. This is where the idea for retrieval-augmented generation techniques came from.

RAG, or retrieval-augmented generation, is a technique that utilizes external knowledge to improve an LLM’s generated results. By building a knowledge base that contains the relevant information for your use cases, a RAG system can retrieve the most relevant passages and provide them as additional context to the generation model.

One crucial part of RAG is the prompting, either within the retrieval portion, for acquiring the relevant information, or within the generation portion, where we pass the context to generate the text. That’s why it is essential to manage the prompts correctly, so that RAG can provide the most relevant output possible.

This article will explore various prompt engineering methods to improve your RAG results.

Retrieval Prompt

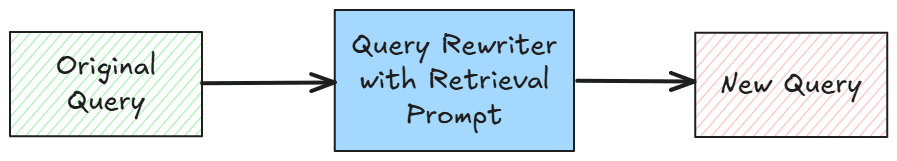

In the RAG structure, the retrieval prompt’s role is to improve the query before the retrieval process runs. It is optional: a simple RAG implementation often passes the raw user query straight to the knowledge base. When the raw query is too short, ambiguous, or worded differently from the documents, a retrieval prompt can enhance it, for example by rewriting it.

Let’s explore the retrieval prompt techniques one by one.

Query Expansion

Query expansion, as its name suggests, expands the current query used for retrieval. The goal is to retrieve more relevant documents by rewriting the query with better wording.

We can expand the query by asking the LLM to rewrite it to generate synonyms or add contextual keywords using domain expertise. Here is an example prompt to expand the current query.

“Expand the query {query} into 3 search-friendly versions using synonyms and related terms. Prioritize technical terms from {domain}.”

You can adapt this prompt to your own use case and add more detail, such as example rewrites that you know work well.
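As a minimal sketch of how the expansion prompt could be wired up, the snippet below fills the template and parses the model’s reply into a list of search queries. The `llm` callable, the `fake_llm` stand-in, and the extra "Return one version per line" instruction are assumptions made for illustration; swap in whatever model client you actually use.

```python
import re
from typing import Callable, List

# Template from the article, plus an assumed instruction that makes parsing easier.
EXPANSION_TEMPLATE = (
    "Expand the query {query} into 3 search-friendly versions using synonyms "
    "and related terms. Prioritize technical terms from {domain}. "
    "Return one version per line."
)

# Matches list markers such as "1.", "2)", "-" or "*" at the start of a line.
_LIST_MARKER = re.compile(r"^\s*(?:[-*•]|\d+[.)])\s*")


def expand_query(query: str, domain: str, llm: Callable[[str], str]) -> List[str]:
    """Return the original query followed by the model's rewrites, deduplicated."""
    raw = llm(EXPANSION_TEMPLATE.format(query=query, domain=domain))
    variants = [query]
    seen = {query.lower()}
    for line in raw.splitlines():
        candidate = _LIST_MARKER.sub("", line).strip()
        if candidate and candidate.lower() not in seen:
            seen.add(candidate.lower())
            variants.append(candidate)
    return variants


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a real model call, so the sketch runs offline.
    return "1. reset account password\n2. recover login credentials\n- reset account password"


queries = expand_query("forgot password", "IT support", fake_llm)
```

Each returned variant can then be sent to the retriever, with the results merged before generation. Keeping the original query first means retrieval never gets worse than the unexpanded baseline.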

Contextual Continuity

If the user already has a conversation history with the system, we can use that history to refine the current retrieval query.

The technique assumes that the conversation history matters for the next query. It is very use case-dependent, as there are many cases in which the history is not relevant to the subsequent query that the user inputs.

For example, the prompt can be written as the following:

“Based on the user’s previous queries about {history}, rewrite their new query: {new query} into a standalone search query.”

You can tweak the prompt further by adding more structure, or by asking for a summary of the history before adding it to the contextual continuity prompt.
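Here is a small sketch of how the history could be folded into that prompt. The function name, the `max_turns` cut-off, and the choice to skip rewriting when there is no history are all assumptions for illustration, not a fixed recipe.

```python
from typing import List, Optional

CONTINUITY_TEMPLATE = (
    "Based on the user's previous queries about {history}, rewrite their new "
    "query: {new_query} into a standalone search query."
)


def build_continuity_prompt(
    history: List[str], new_query: str, max_turns: int = 3
) -> Optional[str]:
    """Build the rewrite prompt from the latest turns, or None if there is no history."""
    # Keep only the most recent turns so old topics do not leak into the rewrite.
    recent = history[-max_turns:] if max_turns > 0 else []
    recent = [turn.strip() for turn in recent if turn.strip()]
    if not recent:
        # Nothing to add: the caller can send new_query to retrieval unchanged.
        return None
    return CONTINUITY_TEMPLATE.format(history="; ".join(recent), new_query=new_query)


prompt = build_continuity_prompt(
    ["pricing for the Pro plan", "Pro plan seat limits"],
    "what about annual billing?",
)
```

Returning `None` for an empty history keeps the "history might not be necessary" case explicit, so the pipeline can fall back to the raw query instead of paying for an extra LLM call.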

Hypothetical Document Embeddings (HyDE)

Hypothetical document embeddings (HyDE) is a query enhancement technique that improves retrieval by generating a hypothetical answer. In this technique, we ask the LLM to write the ideal output for the query, and we use that text to refine the retrieval.

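It’s a technique that could improve retrieval results because the hypothetical document, rather than the short query, becomes the direction for the retrieval model: it is embedded and compared against the knowledge base, and it tends to resemble the stored documents more closely than a question does. The quality of the hypothetical document will also depend on the quality of the LLM.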

For example, we can have the following prompt:

“Write a hypothetical paragraph answering {user query}. Use this text to find relevant documents.”

We can generate the hypothetical document from the query as it is, or combine it with a query we have refined using another technique. What’s important is that the hypothetical documents generated are relevant to the original query.
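To make the flow concrete, here is a toy sketch of HyDE-style retrieval. It assumes a bag-of-words `embed` function and a hard-coded `fake_llm`, both stand-ins invented for this example; a real system would call an embedding model and an LLM instead. The second sentence of the prompt ("Use this text to find relevant documents") is carried out by the code rather than sent to the model.

```python
import math
import re
from collections import Counter
from typing import Callable, List

HYDE_TEMPLATE = "Write a hypothetical paragraph answering {user_query}."


def embed(text: str) -> Counter:
    # Toy bag-of-words "embedding", used only so the sketch is self-contained.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def hyde_retrieve(
    user_query: str, documents: List[str], llm: Callable[[str], str], top_k: int = 1
) -> List[str]:
    """Rank documents by similarity to an LLM-written hypothetical answer."""
    hypothetical = llm(HYDE_TEMPLATE.format(user_query=user_query))
    query_vector = embed(hypothetical)
    ranked = sorted(
        documents, key=lambda doc: cosine(query_vector, embed(doc)), reverse=True
    )
    return ranked[:top_k]


def fake_llm(prompt: str) -> str:
    # Hypothetical model output for the example query below.
    return "Plants use sunlight, water and carbon dioxide to make glucose and release oxygen."


docs = [
    "The stock market closed higher on Friday.",
    "Photosynthesis converts sunlight, water and carbon dioxide into glucose and oxygen.",
]
question = "How do plants make food?"
best = hyde_retrieve(question, docs, fake_llm)
```

In this example the raw question shares no words with either document, so a word-overlap search on the question alone cannot tell them apart, while the hypothetical paragraph overlaps heavily with the photosynthesis document.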

Generation Prompt

RAG consists of retrieval and generation aspects. The generation facet is where the retrieved context is passed into the LLM to answer the query. Often, the generation prompt is so simple that even when the relevant document chunks are retrieved, the generated output is not sound. We need a proper generation prompt to improve the RAG output.

I have previously written more about prompt engineering techniques, which you can read in the following article. However, for now, let’s explore further prompts that are useful for the generation part of the RAG system.

Explicit Retrieval Constraints

RAG is all about passing the retrieved context into the generation prompt. We want the LLM to answer the query according to the documents passed into the model. However, LLMs often answer with information from outside the given context. That’s why we can instruct the LLM to answer based only on the retrieved documents.

For example, here is a prompt that explicitly instructs the model to answer only from the documents.

“Answer using ONLY the provided document sources: {documents}. If the answer isn’t there, say ‘I don’t know.’ Do not use prior knowledge.”

Using the prompt above, we can reduce hallucination from the generator model, although no prompt eliminates it entirely. We limit the model’s reliance on its inherent knowledge and steer it toward the context knowledge.
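A minimal sketch of this pattern might look like the following. The numbered `[1]`, `[2]` source labels, the trailing `Question:` line, and the `is_refusal` helper are assumptions added for illustration; they are one possible way to lay out the prompt and to detect the fallback answer downstream.

```python
from typing import List

CONSTRAINED_TEMPLATE = (
    "Answer using ONLY the provided document sources:\n{documents}\n\n"
    "If the answer isn't there, say 'I don't know.' Do not use prior knowledge.\n\n"
    "Question: {query}"
)


def build_constrained_prompt(query: str, documents: List[str]) -> str:
    """Number each retrieved document and place it in the constrained prompt."""
    numbered = "\n".join(
        f"[{i}] {doc.strip()}" for i, doc in enumerate(documents, start=1)
    )
    return CONSTRAINED_TEMPLATE.format(documents=numbered, query=query)


def is_refusal(answer: str) -> bool:
    """Detect the fallback answer so the application can handle it separately."""
    # Normalize curly apostrophes, case, and the trailing period before comparing.
    normalized = answer.replace("\u2019", "'").strip().lower().rstrip(".")
    return normalized == "i don't know"


prompt = build_constrained_prompt(
    "When was the policy updated?",
    ["The refund policy was updated in March.", "Shipping takes five days."],
)
```

Checking for the fallback answer lets the application show a "no result found" message or trigger a broader search instead of displaying a bare "I don't know."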

Chain of Thought (CoT) Reasoning

CoT is a reasoning prompt engineering technique that encourages the model to break down a complex problem before reaching the output, using intermediate reasoning steps to enhance the final result.

With the RAG system, the CoT technique will help provide a more structured explanation and justify the response based on the retrieved context. This tends to make the result more coherent and accurate.

For example, we can use the following prompt for CoT in the generation step:

“Based on the retrieved context: {retrieved documents}, answer the question {query} step by step, first identifying key facts, then reasoning through the answer.”

Try the prompt above to improve the RAG output, especially if the use case includes a complex question-answering process.
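One practical detail with CoT is that the response mixes reasoning and answer. The sketch below assumes we add a closing instruction asking for a line that starts with "Final answer:" (a convention invented for this example, not part of the prompt above) so the application can separate the two.

```python
from typing import List, Tuple

FINAL_MARKER = "Final answer:"  # Assumed marker; any unambiguous label would do.

COT_TEMPLATE = (
    "Based on the retrieved context: {retrieved_documents}, answer the question "
    "{query} step by step, first identifying key facts, then reasoning through "
    "the answer. Finish with a line that starts with '" + FINAL_MARKER + "'."
)


def build_cot_prompt(query: str, retrieved_documents: List[str]) -> str:
    """Fill the CoT template with the retrieved chunks, one per line."""
    context = "\n".join(doc.strip() for doc in retrieved_documents)
    return COT_TEMPLATE.format(retrieved_documents=context, query=query)


def split_reasoning(response: str) -> Tuple[str, str]:
    """Return (reasoning, final_answer); final_answer is empty if the marker is missing."""
    head, marker, tail = response.rpartition(FINAL_MARKER)
    if not marker:
        return response.strip(), ""
    return head.strip(), tail.strip()


reasoning, answer = split_reasoning(
    "Key facts: the invoice is dated May 2.\n"
    "Reasoning: payment is due 30 days later.\n"
    "Final answer: June 1"
)
```

Splitting on the last occurrence of the marker means the reasoning can be logged or shown on demand while the user sees only the short answer.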

Extractive Answering

Extractive answering is a prompt engineering technique that tries to produce output using only the relevant text from the passed documents instead of generating elaborate responses from them. In this technique, we return the exact portion of the retrieved documents to minimize hallucination.

Here is an example prompt:

“Extract the most relevant passage from the retrieved documents {retrieved documents} that answers the query {query}.

Return only the exact text from {retrieved documents} without modification.”

Using the above prompt, we ask the model to leave the documents from the knowledge base unchanged. This matters in use cases such as legal or medical applications, where we don’t want any deviation from the source document.
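Since a prompt alone cannot guarantee a verbatim quote, it may help to check the output. The sketch below builds the extractive prompt (lightly adapted so the documents are inserted only once) and verifies that the returned passage really appears in one of the retrieved documents. The function names and the whitespace normalization are assumptions for illustration.

```python
from typing import List

EXTRACTIVE_TEMPLATE = (
    "Extract the most relevant passage from the retrieved documents "
    "{retrieved_documents} that answers the query {query}.\n\n"
    "Return only the exact text from the retrieved documents without modification."
)


def build_extractive_prompt(query: str, retrieved_documents: List[str]) -> str:
    context = "\n".join(doc.strip() for doc in retrieved_documents)
    return EXTRACTIVE_TEMPLATE.format(retrieved_documents=context, query=query)


def is_verbatim(answer: str, retrieved_documents: List[str]) -> bool:
    """True if the answer appears word for word in at least one document."""
    # Collapse whitespace so line breaks in the source do not cause false negatives.
    normalized = " ".join(answer.split())
    if not normalized:
        return False
    return any(normalized in " ".join(doc.split()) for doc in retrieved_documents)


docs = ["The tenant must give 30 days   written notice\nbefore vacating."]
ok = is_verbatim("30 days written notice before vacating.", docs)
paraphrased = is_verbatim("thirty days of notice", docs)
```

If the check fails, the application can retry, fall back to showing the full retrieved chunk, or flag the answer for review.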

Contrastive Answering

Contrastive answering is a technique that generates a response from the context given to answer the query from multiple perspectives. It’s used to weigh different viewpoints and arguments where a single perspective might not be sufficient.

This technique is beneficial if our RAG use cases are in a domain where questions are open to debate. It helps users see different interpretations and encourages a deeper exploration of the documents.

For example, here is a prompt that provides contrastive answers using the pros and cons found in the documents:

“Based on the retrieved documents: {retrieved documents}, provide a balanced analysis of {query} by listing:

– Pros (supporting arguments)

– Cons (counterarguments)

Support each point with evidence from the retrieved context.”

You can always tweak the prompt into a more detailed structure and discussion format.
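As a rough sketch, the contrastive prompt can be paired with a small parser that turns the response into structured pros and cons. The parser assumes the model echoes "Pros" and "Cons" as section headings followed by bullet lines, which is likely with this prompt but not guaranteed; the function names are illustrative.

```python
import re
from typing import Dict, List

CONTRASTIVE_TEMPLATE = (
    "Based on the retrieved documents: {retrieved_documents}, provide a balanced "
    "analysis of {query} by listing:\n"
    "- Pros (supporting arguments)\n"
    "- Cons (counterarguments)\n"
    "Support each point with evidence from the retrieved context."
)

# A heading is a line whose first word is "Pros" or "Cons" (optionally bulleted).
_HEADING = re.compile(r"^[-–*#\s]*(pros|cons)\b", re.IGNORECASE)
_BULLET = re.compile(r"^\s*(?:[-–*•]|\d+[.)])\s*")


def build_contrastive_prompt(query: str, retrieved_documents: List[str]) -> str:
    context = "\n".join(doc.strip() for doc in retrieved_documents)
    return CONTRASTIVE_TEMPLATE.format(retrieved_documents=context, query=query)


def parse_pros_cons(response: str) -> Dict[str, List[str]]:
    """Group bullet lines under the most recent Pros or Cons heading."""
    sections: Dict[str, List[str]] = {"pros": [], "cons": []}
    current = None
    for line in response.splitlines():
        text = line.strip()
        if not text:
            continue
        heading = _HEADING.match(text)
        if heading:
            current = heading.group(1).lower()
        elif current:
            sections[current].append(_BULLET.sub("", text))
    return sections


parsed = parse_pros_cons(
    "Pros (supporting arguments):\n"
    "- Lower cost [1]\n"
    "- Consistent results [2]\n"
    "Cons (counterarguments):\n"
    "- Slower setup [1]"
)
```

Structured output like this makes it easier to render the two sides in separate columns or to check that both sides were actually covered.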

Conclusion

RAG is used to improve the output of LLMs by utilizing external knowledge. Using RAG, the model can enhance the accuracy and relevance of its results by drawing on a knowledge base, which can be kept up to date, instead of relying only on its training data.

A basic RAG system is divided into two parts: retrieval and generation. In each part, prompts can play a role in improving the results. This article explored several prompt engineering patterns that can make RAG implementations more successful.

I hope this has helped!