Feature Engineering with LLM Embeddings: Enhancing Scikit-learn Models

Image by Editor | ChatGPT

Large language model embeddings, or LLM embeddings, are a powerful approach to capturing semantically rich information in text and utilizing it to leverage other machine learning models — like those trained using Scikit-learn — in tasks that require deep contextual understanding of text, such as intent recognition or sentiment analysis.

This article briefly describes what LLM embeddings are and shows how to use them as engineered features for Scikit-learn models.

What Are LLM Embeddings?

LLM embeddings are semantically rich numerical (vector) representations of entire text sequences produced by LLMs. This notion may at first challenge some solidly foundational perceptions about text embeddings and the general purpose of LLMs, so let’s clarify a couple of points to better understand LLM embeddings:

- While “conventional” embeddings — like Word2Vec, FastText, etc. — are contextless, fixed vector representations of individual words used as input features for downstream models, LLM embeddings are typically representations of full sequences, such that the meaning of words in the sequence is contextualized.

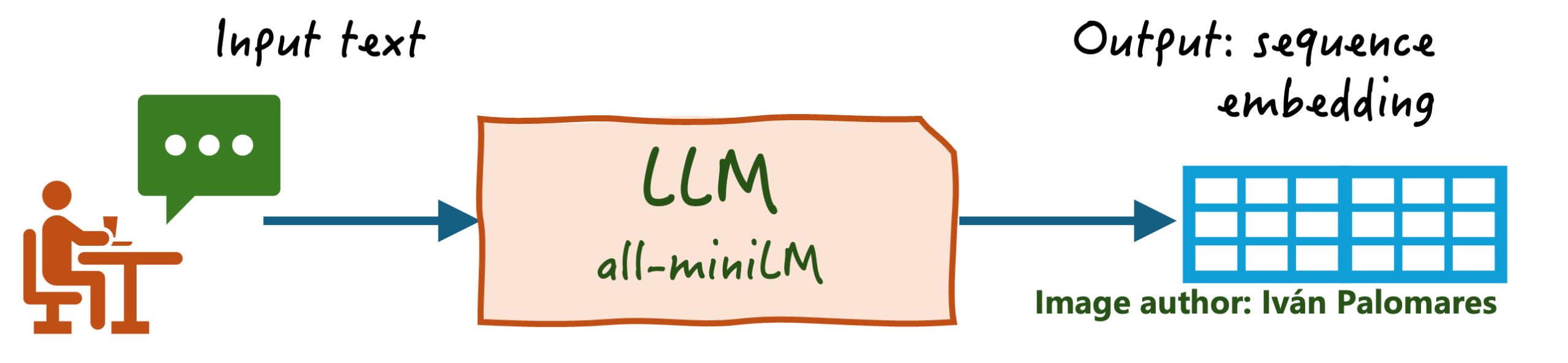

- Although LLMs normally generate text sequences as outputs, some specific models like

all-miniLMare specifically designed to produce context-enriched output embeddings, i.e. numerical representations, instead of generating text. As mentioned earlier, these output embeddings have a richer level of semantic information, making them turning them into suitable inputs for downstream models.

Using LLM Embeddings in Feature Engineering

The first step to leverage LLM embeddings for feature engineering tasks is to use a suitable LLM like those available at Hugging Face’s SentenceTransformers library, for instance, all-MiniLM-L6-v2.

The code excerpt below installs and imports the library and uses it to turn a list of text sequences, contained in a dataset attribute, into embedding representations. The dataset, available here, describes customer support tickets — enquiries and complaints — belonging to several classes, combining text alongside some structured (numerical) customer behavior features.

Let’s focus on turning the raw text feature into LLM embeddings first:

|

from sentence_transformers import SentenceTransformer import pandas as pd

url = “https://raw.githubusercontent.com/gakudo-ai/open-datasets/refs/heads/main/customer_support_dataset.csv” df = pd.read_csv(url)

# Extract columns text_data = df[“text”].tolist() structured_data = df[[“prior_tickets”, “account_age_days”]].values labels = df[“label”].tolist()

model = SentenceTransformer(‘all-MiniLM-L6-v2’) X_embeddings = model.encode(text_data) |

Now that we have taken care of the text feature in the dataset, we prepare the two numerical features describing customers: prior_tickets and account_age_days. If we observe them, we can see they move across pretty different value ranges; hence, it would be a good idea to scale these attributes.

After that, using Numpy’s hstack() function, both scaled features and LLM embeddings are re-unified:

|

scaler = StandardScaler() structured_scaled = scaler.fit_transform(structured_data) X_combined = np.hstack([structured_scaled, X_embeddings]) |

Now that this feature engineering process has been completed, all that remains is splitting the engineered dataset containing LLM embeddings into training and test sets, training a Scikit-learn model for customer ticket classification — out of five possible classes — and evaluating it.

|

# Split X_train, X_test, y_train, y_test = train_test_split(X_combined, labels, test_size=0.2, random_state=42, stratify=labels)

# Train clf = RandomForestClassifier() clf.fit(X_train, y_train)

# Evaluate y_pred = clf.predict(X_test) print(classification_report(y_test, y_pred)) |

In the above code, the sequence of events is:

- Splits the unified customer features and associated labels into training examples (80%) and test examples (20%). Using the

stratify=labelsargument here is extremely important, since we are handling a very small dataset; otherwise, the representation of all five classes in both the training and test sets would not be guaranteed - Initializes and trains a random forest classifier with default settings: no specified hyperparameters for the ensemble or its underlying trees

- Evaluates the trained classifier on the test set

This is the resulting evaluation output:

|

precision recall f1–score support

billing 0.50 1.00 0.67 2 bug 1.00 1.00 1.00 2 delivery 1.00 0.50 0.67 2 login 1.00 1.00 1.00 2 refund 1.00 0.50 0.67 2

accuracy 0.80 10 macro avg 0.90 0.80 0.80 10 weighted avg 0.90 0.80 0.80 10 |

Based on the classification report, our approach appears to be quite successful. Achieving an 80% accuracy and a weighted F1-score of 0.80 on a five-class problem with a very small dataset indicates that the model has learned meaningful patterns. This success is largely thanks to the LLM embeddings, which converted raw text into numerically rich features that captured the semantic intent of the customer tickets. This allowed the Random Forest classifier to distinguish between nuanced requests that would be challenging for simpler text-representation methods.

To truly quantify the value added, a crucial next step would be to establish a baseline by comparing these results against a model trained with traditional features like TF-IDF. Further improvements could also be explored by tuning the classifier’s hyperparameters or applying this technique to a larger, more robust dataset to confirm its effectiveness. Nonetheless, this experiment serves as a powerful proof of concept, clearly demonstrating how LLM embeddings can be seamlessly integrated to boost the performance of Scikit-learn models on text-heavy tasks.

Wrapping Up

This article highlighted the significance of LLM embeddings, that is, vector representations of whole text sequences being generated by specialized LLMs for that end, in training downstream machine learning models for tasks like classification in the presence of partly structured data that may contain text. Through easy steps, we showed how to apply feature engineering on raw text features to incorporate their semantic information as LLM embeddings for training scikit-learning models.